Gundersen Health System Expands into the Chippewa Valley: A Commitment to Providing Quality Healthcare Services Closer to Home

Gundersen Health System is interested in exploring opportunities in the Chippewa Valley region to provide care as close to home as possible for the communities it serves. The healthcare system…

FIDE World Chess Championship Match 2024: A Battle for Supremacy with China’s Ding Liren vs. India’s Gukesh D

The upcoming 2024 FIDE World Chess Championship Match promises to be an unforgettable event that will determine the chess world champion for the next two years. This prestigious tournament, which…

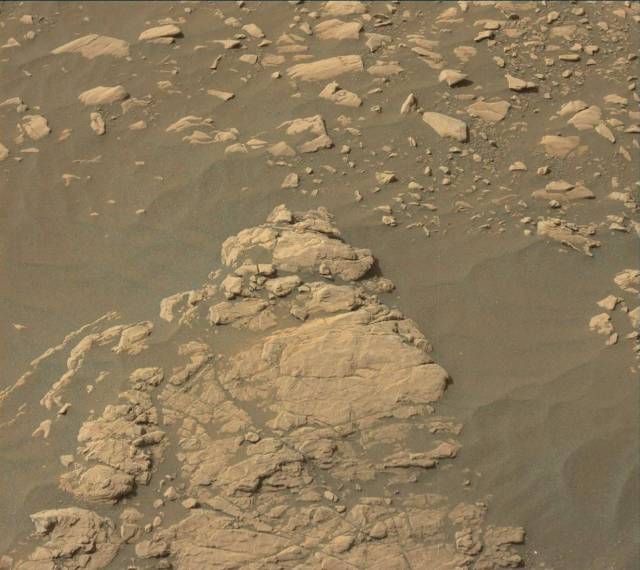

Unraveling the mysteries of Mars: A glimpse into the potential discoveries awaiting us at Aberlady

On Monday, we embarked on a short drive that led us to two potential drill targets. The prime candidate, known as “Aberlady,” showed striking similarities in color, structure, and texture…

Defense Budgets on the Rise: Countries Invest Heavily in Military Capabilities

Defense budgets have been rising for the past year, with countries all over the world investing heavily in their military capabilities. In Europe, countries…

Curiosity Rover Completes Final Tasks at Highfield Drill Site; Captures High-Resolution Images and Conducts Crucial Analyses

This week, the Curiosity rover completed its final tasks at the Highfield drill site. As the Surface Properties Scientist (SPS) on duty, I noticed that there were no new analyses…

Potassium-rich discovery at Broad Cairn: Scientists gear up for analysis on Mars

The science team at Hallaig wasted no time in their investigation of a potential drill target, “Broad Cairn,” located on a bright block within the clay-bearing unit. This spot promised…

Athletes File Legal Action Against Geolocation Tracking: A Case of Balancing Privacy and Crime Prevention in the United States

The issue of privacy remains a major concern in the United States, with strict regulations in place to prevent government intrusion. In Iowa, a group of 26 athletes has filed…

Curiosity Team Overcomes Obstacles on Sol 2256: Plans for Third Drilling Attempt on Mars

The team was hopeful for a productive day on Sol 2256, with plans to drill on Mars and collect science data. However, when they arrived at the workspace, they found…

Fight Disease and Boost Mood with These Anti-Inflammatory Foods

Eating certain foods can help improve mental health and reduce the risk of serious diseases like cardiovascular disease, diabetes, and cancer. Professor Howard E. LeWine from Harvard University, an expert…

SportingKC Prepares for Exciting Showdown with Minnesota United FC Despite Key Defender Jake Davis’ Suspension

As part of their commitment to keeping fans informed about the health and well-being of their players, SportingKC.com publishes a Sports Medicine Report prior to each match. This report is…